Write string.h functions using string instructions in asm x86-64

Introduction

The C standard library offers a bunch of functions (whose declarations can be found in the string.h header) to manage NULL-terminated strings and arrays. These are some of the most used C functions, often implemented as builtin by the C compiler, as they are crucial to the speed of programs.

On the other hand, the x86 architecture contains “string instructions”, aimed at implementing operations on strings at the hardware level. Moreover, the x86 architecture was incrementally enhanced with SIMD instructions over the years, allowing for the processing of multiple bytes of data in a single instruction.

In this article, we’ll inspect the implementation of string.h of the GNU standard library for x86, and see how it compares with a pure assembly implementation of these functions using string instructions and SIMD, and try to explain the choices made by the GNU developers and help you write better assembly.

Disassembling a call to memcpy

One of the most popular C functions is memcpy.

It copies an array of bytes to another, which is a very common operation and makes its performance particularly important.

There are several ways you can perform this operation using x86 asm. Let’s see how it is implemented by gcc using this simple C program:

#include <string.h>

#define BUF_LEN 1024

char a[BUF_LEN];

char b[BUF_LEN];

int main(void) {

memcpy(b, a, BUF_LEN);

return EXIT_SUCCESS;

}We can observe the generated asm by using godbolt.

Or compile the code using gcc 14.2: gcc -O1 -g -o string main.c

And then disassemble the executable using:

objdump --source-comment="; " --disassembler-color=extended --disassembler-options=intel --no-show-raw-insn --disassemble=main stringYou should get this result:

0000000000401134 <main>:

;

; int main(int argc, char *argv[]) {

; memcpy(b, a, BUF_LEN);

401134: mov esi,0x404440

401139: mov edi,0x404040

40113e: mov ecx,0x80

401143: rep movs QWORD PTR es:[rdi],QWORD PTR ds:[rsi]

; return 0;

; }

401146: mov eax,0x0

40114b: retThe first surprising thing you notice is that the machine code does not contain any call to the memcpy function.

It has been replaced by 3 mov instructions preceding a mysterious rep movsq instruction.

rep movsq is one of the five string instructions defined in the “Intel® 64 and IA-32 Architectures Software Developer’s Manual - Volume 1: Basic Architecture 5.1.8”.

So it is time to learn more about these string instructions.

The string instructions of x86

String instructions perform operations on array elements pointed by rsi (source register) and rdi (destination register).

| instruction | Description | Effect on registers |

|---|---|---|

| movs | Move string | *(rdi++) = *(rsi++) |

| cmps | Compare string | cmp *(rsi++), *(rdi++) |

| scas | Scan string | cmp rax, *(rdi++) |

| lods | Load string | rax = *(rsi++) |

| stos | Store string | *(rdi++) = rax |

Each of these instructions must have a suffix (b,w,d,q) indicating the type of elements pointed by rdi and rsi (byte, word, doubleword, quadword).

These instructions may also have a prefix indicating how to repeat themselves.

| prefix | Description | Effect on registers |

|---|---|---|

| rep | Repeat while the ECX register not zero | for(; rcx != 0; rcx–) |

| repe/repz | Repeat while the ECX register not zero and the ZF flag is set | for(; rcx != 0 && ZF == true; rcx–) |

| repne/repnz | Repeat while the ECX register not zero and the ZF flag is clear | for(; rcx != 0 && ZF == false; rcx–) |

The repe/repz and repne/repnz prefixes are used only with the cmps and scas instructions (as they are the only ones modifying the RFLAGS register).

The movs instruction

Now that we have learned more about the string instructions, we can break down the effect of the rep movsq instruction:

- Copy the quadword pointed by

rsitordi - Add 8 to

rsiandrdiso that they point onto the next quadword - Decrement

rcxand repeat untilrcx == 0

This is what we would expect memcpy to do, except for one thing: bytes are not copied one by one, but in blocks of 8. Here, as the byte size of our arrays is a multiple of 8, we can copy the source array as an array of quadwords. This will necessitate 8 times fewer operations than copying the array one byte at a time.

Let’s change the size of the arrays to 1023 to see how the compiler will react when the array size is not a multiple of 8 anymore:

0000000000401134 <main>:

;

; int main(int argc, char *argv[]) {

; memcpy(b, a, BUF_LEN);

401134: mov esi,0x404440

401139: mov edi,0x404040

40113e: mov ecx,0x7f

401143: rep movs QWORD PTR es:[rdi],QWORD PTR ds:[rsi]

401146: mov eax,DWORD PTR [rsi]

401148: mov DWORD PTR [rdi],eax

40114a: movzx eax,WORD PTR [rip+0x36eb] # 40483c <a+0x3fc>

401151: mov WORD PTR [rip+0x32e4],ax # 40443c <b+0x3fc>

401158: movzx eax,BYTE PTR [rip+0x36df] # 40483e <a+0x3fe>

40115f: mov BYTE PTR [rip+0x32d9],al # 40443e <b+0x3fe>

; return 0;

; }

401165: mov eax,0x0

40116a: retInstead of replacing the rep movsq by the rep movsb instruction, gcc preferred to stop the repetition of the movsq instruction 8 bytes earlier and add mov instructions to copy a doubleword, a word, and a byte.

The cmps instruction

The cmps instruction will compare the elements pointed by rsi and rdi and will set the flag accordingly.

As cmps will set the ZF flag, we can use the repe/repz and repne/repnz prefixes to, respectively, continue until the strings differ or stop when matching characters are encountered.

Let’s write a basic memcmp function using this instruction:

; int memcmp_cmpsb(rdi: const void s1[.n], rsi: const void s2[.n], rdx: size_t n);

memcmp:

mov rcx, rdx ; rcx = n

xor eax, eax ; Set return value to zero

xor edx, edx ; rdx = 0

repe cmpsb ; for(; rcx != 0 and ZF == true; rcx--)

; cmp *(rsi++), *(rdi++)

setb al ; if(ZF == false and CF == true) al = 1

seta dl ; if(ZF == false and CF == false) bl = 1

sub eax, edx ; return al - dl

.exit

retWe use the repe cmpsb instruction to iterate over the strings s1 and s2 until two bytes differ.

When we exit the repe cmpsb instruction, the RFLAGS register is set according to the last byte comparison. We can then use the set[cc] instructions to set bytes al and dl according to the result comparison.

In the same way, the memcpy function copies groups of 8 bytes; we can use the repe cmpsq instruction to compare bytes in groups of 8 (or cmpsd for groups of 4 bytes on 32-bit architectures).

; int memcmp_cmpsq_unaligned(rdi: const void s1[.n], rsi: const void s2[.n], rdx: size_t n);

memcmp_cmpsq_unaligned:

lea rcx, [rdx + 0x7] ; rcx = n

and rdx, (8 - 1) ; rdx = n % 8

shr rcx, 3 ; rcx = n / 8

xor eax, eax ; rax = 0

repe cmpsq ; for(; rcx != 0 and ZF == true; rcx += 8)

; cmp *(rsi++), *(rdi++)

je .exit ; If no difference was found return

mov r8, [rdi - 0x8] ; Read the last (unaligned) quadword of s1

mov r9, [rsi - 0x8] ; Read the last (unaligned) quadword of s2

test rcx, rcx ; if(rcx != 0)

jnz .cmp ; goto .cmp

shl rdx, 3 ; rdx = 8 * (8 - n % 8)

jz .cmp ; if(rdx == 0) goto .cmp

bzhi r8, r8, rdx ; r8 <<= 8 * (8 - n % 8)

bzhi r9, r9, rdx ; r9 <<= 8 * (8 - n % 8)

.cmp:

bswap r8 ; Convert r8 to big-endian for lexical comparison

bswap r9 ; Convert r9 to big-endian for lexical comparison

cmp r8, r9 ; Lexical comparison of quadwords

seta al ; if (r8 > r9) al = 1

setb cl ; if (r8 < r9) cl = 1

sub eax, ecx ; return eax - ecx

.exit:

retTo get the result of the comparison, we need to compare the last two quadwords. However, on little-endian systems, the lowest significant byte will be the first one, and we want to compare the byte in lexical order. Hence, the need to convert the quadword to big-endian using the bswap instruction.

The instruction bzhi is useful when you need to mask out the higher bits of a register.

Here, when comparing the last quadword, we need to erase all bits in r8 and r9 that aren’t “valid” (i.e. which are not part of the input arrays).

You can find documentation about this instruction here.

This function should only be used for blocks of memory of size multiples of 8 with 8-byte alignment.

For production use, refer to the Benchmarking section.

The scas instruction

The scas instruction will compare the content of rax with the element pointed by rdi and set the flag accordingly.

We can use it in a similar way to what we did for cmps, taking advantage of the repe/repz and repne/repnz prefixes.

Let’s write a simple strlen function using the scasb instruction:

; size_t strlen(rdi: const char *s)

strlen:

mov rcx, -1

repnz scasb ; for(; rcx != 0 and ZF == false; rcx--)

; cmp rax, *(rdi++)

not rcx ; before this insn rcx = - (len(rdi) + 2)

dec rcx ; after this insn rcx = ~(- (len(rdi) + 2)) - 1

; = -(- (len(rdi) + 2)) - 1 - 1

; = len(rdi)

xchg rax, rcx ; rax = len(rdi)

retDon’t use this function for production code, as it can only compare bytes one by one.

For production, always prefer a loop alternative to compare groups of bytes using the largest registers (see the Benchmarking section).

The lods instruction

The lods instruction will load to the rax register the element pointed to by rsi and increment rsi to point to the next element.

As this instruction does nothing but set register, it is never used with a prefix (the value would be overwritten for each repetition).

It can, however, be used to examine a string, for instance, to find a character:

; char* strchr_lodsb(rdi: const char* s, rsi: int c)

strchr_lodsb:

xchg rdi, rsi ; rdi = c, rsi = s

.loop:

lodsb ; al = *(rsi++)

cmp dil, al ; if(c == al)

je .end ; goto .end

test al, al ; if(al != 0)

jnz .loop ; goto .loop

xor rax, rax ; return 0

ret

.end:

lea rax, [rsi - 1] ; return rsi - 1

retThe stos instruction

The stos instruction will write the content of the rax register to the element pointed by rdi and increment rdi to point to the next element.

Note that according to the “Intel® 64 and IA-32 Architectures Software Developer’s Manual - Volume 1: Basic Architecture 7.3.9.2”:

a REP STOS instruction is the fastest way to initialize a large block of memory.

Actually, this is the way gcc will implement a memset when it knows the size and alignment of the string:

#include <string.h>

#define BUF_LEN 1024

char a[BUF_LEN];

int main(int argc, char *argv[]) {

memset(a, 1, BUF_LEN);

return 0;

}000000000040115a <main>:

;

; int main(int argc, char *argv[]) {

; memset(a, 1, BUF_LEN);

40115a: mov edx,0x404460

40115f: mov ecx,0x80

401164: movabs rax,0x101010101010101

40116e: mov rdi,rdx

401171: rep stos QWORD PTR es:[rdi],rax

; return 0;

; }

401174: mov eax,0x0

401179: retAlways use the rep movsq instruction to initialize large blocks of memory.

For small blocks of memory, use an unrolled loop and the largest registers.

Let’s turn around

The direction flag

It wouldn’t be as much fun if we couldn’t make it backward 😄

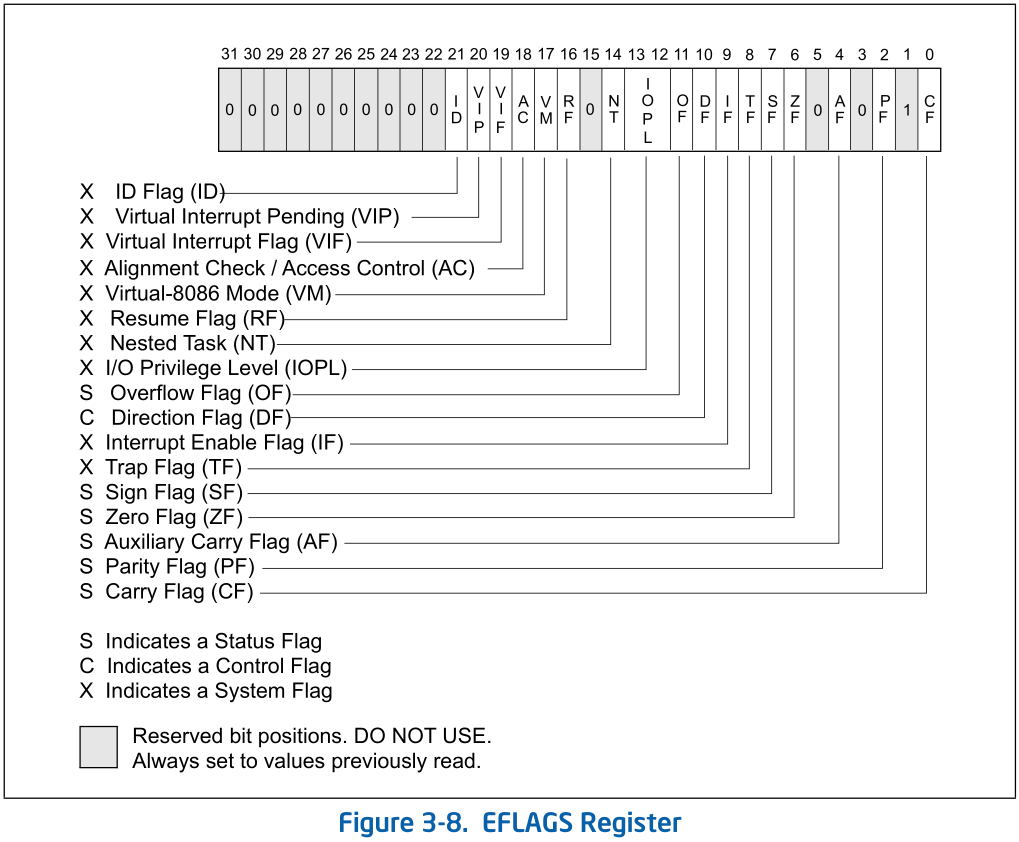

On x86, the flag register (RFLAGS) has a direction flag, RFFLAGS.DF, which controls the direction of the string operations.

This flag can be set and cleared respectively, using the std and cld instructions.

std ; SeT Direction

; Here DF = 1, rdi and rsi are decremented

cld ; CLear Direction

; Here DF = 0, rdi and rsi are incrementedHere you have a detailed view of the RFLAGS register:

On Intel64, the upper 32 bits of the RFLAGS register are reserved, and the lower 32-bits are the same as the EFLAGS register of 32-bit architectures.

The EFLAGS register on Intel64 and IA-32 architectures

Saving and restoring RFLAGS

When you write an assembly subroutine using string instructions, you should always:

- save the state of the RFLAGS register

- set or clear RFLAGS.DF

- do your work

- restore the RFLAGS register

This way, your subroutine will work independently of the state of the direction flag and won’t break other routines relying on this flag.

To do so, you can push RFLAGS to the stack using the pushfq instruction and restore it using the popfq instruction.

However, the System V Application Binary Interface for AMD64 states that:

The direction flag DF in the %rFLAGS register must be clear (set to “forward” direction) on function entry and return.

So, if you’re writing code targeting the System V ABI, you may assume that the direction flag is clear and ensure that you keep it clear when leaving your functions.

An example with strrchr

We can code a simple version of strrchr ( which looks for the last occurrence of a character in a string) based on our strchr function by simply setting rdi = s + len(s) + 1 and by setting the direction flag.

; char *strrchr(rdi: const char *s, esi: int c);

strchr:

push rdi ; push 1st arg on the stack

push rsi ; push 2nd arg on the stack

; rcx = strlen(rdi) + 1

call strlen

mov rcx, rax

add rcx, 1

std ; RFLAGS.DF = 1

pop rax ; rax = c

pop rdi ; rdi = s

add rdi, rcx ; rdi = s + len(s) + 1

xor rdx, rdx ; rdx = 0

repne scasb ; for(; rcx != 0 and ZF == false; rcx--)

; cmp al, *(rdi--)

jne .exit

mov rdx, rdi ; if(ZF == true)

sub rdx, 1 ; rdx = rdi - 1

.exit:

cld ; RFLAGS.DF = 0

mov rax, rdx ; return rdx

retWe use the System V ABI for our functions, so there is no need to save the RFLAGS register, but we make sure to clear the direction flag before returning from the function.

movs and stos instructions, because, as we’ll see in the last part, it may disable a whole class of optimization known as “fast-string operations”.Vectorized string instructions

Vectorized string instructions are quite intricate and should not be used in most cases.

If you’re in to discover one of the strangest instructions of x86 you can continue, but if you prefer to watch graphs, you can skip to the benchmarking section.

Implicit ? Explicit ? Index ? Mask ?

SSE4.2 introduced another set of 4 instructions: The vectorized string instructions.

| instruction | Description |

|---|---|

| pcmpestri | Packed Compare Implicit Length Strings, Return Index |

| pcmpistri | Packed Compare Explicit Length Strings, Return Index |

| pcmpestrm | Packed Compare Implicit Length Strings, Return Mask |

| pcmpeistrm | Packed Compare Explicit Length Strings, Return Mask |

A vectorized string instruction takes three parameters:

pcmpestri xmm1, xmm2/m128, imm8The first and second arguments of the instructions are meant to contain the string fragments to be compared.

The fragments are considered valid until:

- The first null byte for the “Implicit Length” versions

- The length contained in

eax(forxmm1) andedx(forxmm2) for the “Explicit Length” versions

The result is:

- The index of the first match, stored in the

ecxregister for the “Index” version. - A mask of bits/bytes/words (depending on imm8), stored in the

xmm0register for the “Mask” version.

Other information is carried out by the RFLAGS register:

| Flag | Information |

|---|---|

| Carry | Result is non-zero |

| Sign | The first string ends |

| Zero | The second string ends |

| Overflow | Least Significant Bit of result |

The Parity and Adjust flags are reset.

The imm8 control byte

But this doesn’t tell us which comparisons these instructions can perform. Well, they can perform 4 basic operations:

- Find characters from a set: Finds which of the bytes in the second vector operand belong to the set defined by the bytes in the first vector operand, comparing all 256 possible combinations in one operation.

- Find characters in a range: Finds which of the bytes in the second vector operand are within the range defined by the first vector operand.

- Compare strings: Determine if the two strings are identical.

- Substring search: Finds all occurrences of a substring defined by the first vector operand in the second vector operand.

The operation performed by the vectorized string instruction is controlled by the value of the imm8 byte:

| imm8 | Description |

|---|---|

| ·······0b | 128-bit sources treated as 16 packed bytes. |

| ·······1b | 128-bit sources treated as 8 packed words. |

| ······0·b | Packed bytes/words are unsigned. |

| ······1·b | Packed bytes/words are signed. |

| ····00··b | Mode is equal any. |

| ····01··b | Mode is ranges. |

| ····10··b | Mode is equal each. |

| ····11··b | Mode is equal ordered. |

| ···0····b | IntRes1 is unmodified. |

| ···1····b | IntRes1 is negated (1’s complement). |

| ··0·····b | Negation of IntRes1 is for all 16 (8) bits. |

| ··1·····b | Negation of IntRes1 is masked by reg/mem validity. |

| ·0······b | Index: Index of the least significant, set, bit is used (regardless of corresponding input element validity). Mask: IntRes2 is returned in the least significant bits of XMM0. |

| ·1······b | Index: Index of the most significant, set, bit is used (regardless of corresponding input element validity). Mask: Each bit of IntRes2 is expanded to byte/word. |

The MSB of imm8 has no defined effect and should be 0.

This means that if we want to compare xmm1 and xmm2 for byte equality and get the index of the first non-matching byte, we have to set imm8 = 0b0001'1000

We can define some macros so you do not have to remember this:

PACKED_UBYTE equ 0b00

PACKED_UWORD equ 0b01

PACKED_BYTE equ 0b10

PACKED_WORD equ 0b11

CMP_STR_EQU_ANY equ (0b00 << 2)

CMP_STR_EQU_RANGES equ (0b01 << 2)

CMP_STR_EQU_EACH equ (0b10 << 2)

CMP_STR_EQU_ORDERED equ (0b11 << 2)

CMP_STR_INV_ALL equ (0b01 << 4)

CMP_STR_INV_VALID_ONLY equ (0b11 << 4)

CMP_STRI_FIND_LSB_SET equ (0b00 << 6)

CMP_STRI_FIND_MSB_SET equ (0b01 << 6)

CMP_STRM_BIT_MASK equ (0b00 << 6)

CMP_STRM_BYTE_MASK equ (0b01 << 6)From these definitions, we can create the imm8 flag for a vpcmpxstrx instruction with bitwise or.

For instance, a flag for the vpcmpestri instruction to get the index of the first differing byte:

BYTEWISE_CMP equ (PACKED_UBYTE | CMP_STR_EQU_EACH | CMP_STR_INV_VALID_ONLY | CMP_STRI_FIND_LSB_SET)A vectorized memcmp version

Now that we know how to use the vpcmpestri instruction and that we defined the imm8 flag to make a byte-wise comparison of the content of AVX registers, we can write a version of memcmp using the vectorized string instructions.

; int memcmp_vpcmpestri_unaligned(rdi: const void s1[.n], rsi: const void s2[.n], rdx: size_t n);

memcmp_vpcmpestri_unaligned:

xor r10d, r10d

mov rax, rdx ; rax = n

test rdx, rdx ; if(n == 0)

jz .exit ; return n

vmovdqu xmm2, [rdi + r10] ; xmm2 = rdi[r10]

vmovdqu xmm3, [rsi + r10] ; xmm3 = rsi[r10]

.loop:

; Compare xmm2 and xmm3 for equality and write the index of the differing byte in ecx

; rax contains the length of the xmm2 string, rdx contains the length of the xmm3 string

; If all byte are the same rcx = 16

vpcmpestri xmm2, xmm3, BYTEWISE_CMP

test cx, 0x10 ; if(rcx != 16)

jz .end ; break

add r10, 0x10 ; r10 += 10

vmovdqu xmm2, [rdi + r10] ; xmm2 = rdi[r10]

vmovdqu xmm3, [rsi + r10] ; xmm3 = rsi[r10]

sub rdx, 0x10 ; rdx -= 16

ja .loop ; if(rdx > 0) goto .loop

xor eax, eax ; rax = 0

ret ; return

.end:

xor eax, eax ; rax = 0

cmp rcx, rdx ; if(index >= rdx)

jae .exit ; return;

xor edx, edx ; rdx = 0

add r10, rcx ; r10 += index

mov r8b, [rdi + r10] ; r8b = rdi[r10]

mov r9b, [rsi + r10] ; r9b = rsi[r10]

cmp r8b, r9b ; if(r8b > r9b)

seta al ; return 1

setb dl ; else

sub eax, edx ; return -1

.exit:

retBenchmarking our string instructions

Benchmark 1: memcpy

At the beginning of the previous part, we disassembled a call to memcpy to see that it had been inlined with a rep movsq instruction by gcc.

The compiler is able to realize this optimization because the alignment and the size of both arrays are known at compile time.

Let’s add an indirection to the memcpy call so that the compiler can’t rely on this information anymore.

#include <string.h>

#define BUF_LEN 1023

char a[BUF_LEN];

char b[BUF_LEN];

void *copy(void *restrict dest, const void *restrict src, size_t n) {

return memcpy(dest, src, n);

}

int main(int argc, char *argv[]) {

copy(b, a, BUF_LEN);

return 0;

}objdump --source-comment="; " --disassembler-color=extended --disassembler-option=intel-mnemonic --no-show-raw-insn --disassemble=copy string0000000000401126 <copy>:

; #define BUF_LEN 1023

;

; char a[BUF_LEN];

; char b[BUF_LEN];

;

; void *copy(void *restrict dest, const void *restrict src, size_t n) {

401126: sub rsp,0x8

; return memcpy(dest, src, n);

40112a: call 401030 <memcpy@plt>

; }

40112f: add rsp,0x8

401133: retAnother situation in which gcc will produce a proper call to the libc memcpy function is when the target architecture has vector extensions. In this situation, the compiler is aware that the memcpy implementation will use vector instructions, which may be faster than rep movs.

You can test it by adding the flag -march=corei7 to your gcc command to see what code gcc will produce for an architecture with vector extensions (you can see this in godbolt).

We can now compare different assembly versions of memcpy to its glibc implementation.

I wrote 8 versions of a program copying 4MiB of memory using: an unoptimized for loop, the glibc memcpy function, rep movsb, rep movsq, the SSE2 extension, and the AVX and AVX2 extensions.

I also wrote a backward copy to compare the speed of rep movsb when RFLAGS.DF is set.

#include <stddef.h>

__attribute__((optimize("O1"))) void *

memcpy_dummy(void *restrict dst, const void *restrict src, size_t n) {

void *const ret = dst;

for (int i = 0; i < n; i++)

*((char *)dst++) = *((char *)src++);

return ret;

}; void *memcpy_movsb(rdi: const void dst[.n], rsi: void src[.n], rdx: size_t n)

memcpy_movsb:

mov rax, rdi ; rax = dst

mov rcx, rdx ; rcx = n

rep movsb ; for(; rcx != 0; rcx--)

; *(rdi++) = *(rsi++)

ret ; return rax; void *memcpy_movsb_std(rdi: const void dst[.n], rsi: void src[.n], rdx: size_t n)

memcpy_movsb_std:

mov rax, rdi ; rax = dst

std ; Set direction flag

mov rcx, rdx ; rcx = n

sub rdx, 1 ; rdx = n - 1

add rdi, rdx ; rdi = dst + (n - 1)

add rsi, rdx ; rsi = src + (n - 1)

rep movsb ; for(; rcx != 0; rcx--)

; *(rdi)++ = *(rsi++)

cld ; Clear direction flag

ret ; return rax; void *memcpy_movb(rdi: const void dst[.n], rsi: void src[.n], rdx: size_t n)

memcpy_movb:

mov rax, rdi ; Save dst in rax

test rdx, rdx ; if(rdx == 0)

jz .exit ; goto .exit

xor ecx, ecx ; rcx = 0

.loop:

movzx r8d, byte [rsi+rcx] ; r8b = rsi[rdx]

mov byte [rdi+rcx], r8b ; rdi[rcx] = r8b

add rcx, 1 ; rcx++

cmp rcx, rdx ; if(rcx != n)

jne .loop ; goto .loop

.exit:

ret; Macro used to copy less than a quadword

; The macro take two parameters:

; - A register containing the byte count to copy

; - A register containing the current 8-byte aligned offset

%macro memcpy_epilog_qword 2

cmp %1, 4 ; if(r8 < 4)

jb %%word ; goto .word

mov eax, [rsi + %2] ; eax = src[%2]

mov [rdi + %2], eax ; dst[%2] = eax

add %2, 4 ; %2 += 4

sub %1, 4 ; %1 -= 4

%%word:

cmp %1, 2 ; if(r8 < 2)

jb %%byte ; goto .byte

movzx eax, word [rsi + %2] ; ax = src[%2]

mov [rdi + %2], ax ; dst[%2] = ax

add %2, 2 ; %2 += 2

sub %1, 2 ; %1 -= 2

%%byte:

test %1, %1 ; if(r8 == 0)

jz %%exit ; goto .exit

movzx eax, byte [rsi + %2] ; al = src[%2]

mov [rdi + %2], al ; dst[%2] = al

%%exit:

%endmacro

; void *memcpy_movsq(rdi: const void dst[.n], rsi: void src[.n], rdx: size_t n)

memcpy_movsq:

push rdi ; rax = dst

cmp rdx, 8 ; if(n < 8)

jb .end ; goto .end

lea rcx, [rsi + 8] ; rcx = src + 8

movsq ; Copy first quadword

and rsi, -8 ; rsi = align(src + 8, 8)

sub rcx, rsi ; rcx = (src + 8) - align(src + 8, 8)

sub rdi, rcx ; rdi = (dst + 8) - ((src + 8) - align(src + 8, 8))

lea rdx, [rdx + rcx - 8] ; rdx = n - (8 - ((src + 8) - align(src + 8, 8)))

mov rcx, rdx ; rcx = n - (align(src + 8, 8) - src)

and rcx, -8 ; rcx = align(n - (align(src + 8, 8) - src), 8)

shr rcx, 3 ; rcx = align(n - (align(src + 8, 8) - src), 8) / 8

rep movsq ; for(; rcx != 0; rcx--)

; *(rdi++) = *(rsi++)

and rdx, (8 - 1) ; rdx = n - (align(src + 8, 8) - src) % 8

.end:

xor ecx, ecx

memcpy_epilog_qword rdx, rcx

.exit:

pop rax

ret; void *memcpy_movq(rdi: const void dst[.n], rsi: void src[.n], rdx: size_t n)

memcpy_movq:

xor ecx, ecx ; rcx = 0

mov r9, rdx ; r9 = n

cmp r9, 8 ; if(n < 8)

jb .end ; goto .end

mov r8, [rsi] ; r8 = *(rsi)

mov [rdi], r8 ; *(rsi) = r8

lea rcx, [rdi + 7] ; rcx = dst + 7

and rcx, -8 ; rcx = align((dst + 7), 8)

sub rcx, rdi ; rcx = align((dst + 7), 8) - dst

sub r9, rcx ; r9 = dst + n - align((dst + 7), 8)

.loop:

mov rax, [rsi + rcx] ; r8 = src[rcx]

mov [rdi + rcx], rax ; dst[rcx] = r8

add rcx, 8 ; rcx += 8

sub r9, 8 ; r9 -= 8

cmp r9, 8 ; if(r9 >= 8)

jae .loop ; goto .loop

.end:

memcpy_epilog_qword r9, rcx

mov rax, rdi ; return dst

ret; Macro used to copy less than a 16 bytes

; The macro take two parameters:

; - A register containing the byte count to copy

; - A register containing the current 16-byte aligned offset

%macro memcpy_epilog_avx 2

cmp %1, 8

jb %%dword

mov rax, [rsi + %2]

mov [rdi + %2], rax

add %2, 8

sub %1, 8

%%dword:

memcpy_epilog_qword %1, %2

%endmacro

; void *memcpy_avx(rdi: const void dst[.n], rsi: void src[.n], rdx: size_t n)

memcpy_avx:

xor ecx, ecx ; rcx = 0

cmp rdx, 16 ; if(n < 16)

jb .end ; goto .end

vmovdqu xmm0, [rsi] ; Copy the first

vmovdqu [rdi], xmm0 ; 32 bytes

lea rcx, [rsi + 15] ; rcx = src + 15

and rcx, -16 ; rcx = align((src + 15), 16)

sub rcx, rsi ; rcx = align((src + 15), 16) - src

sub rdx, rcx ; rdx = src + n - align((src + 15), 16)

.loop:

vmovdqa xmm0, [rsi + rcx] ; xmm0 = rsi[rcx]

vmovdqu [rdi + rcx], xmm0 ; rdi[rcx] = xmm0

add rcx, 16 ; rcx += 16

sub rdx, 16 ; rdx -= 16

cmp rdx, 16 ; if(rdx >= 16)

jae .loop ; goto .loop

.end:

memcpy_epilog_avx rdx, rcx

mov rax, rdi

ret; Macro used to copy less than a 32 bytes

; The macro take two parameters:

; - A register containing the byte count to copy

; - A register containing the current 32-byte aligned offset

%macro memcpy_epilog_avx2 2

cmp %1, 16

jb %%qword

movdqa xmm0, [rsi + %2]

movdqu [rdi + %2], xmm0

add %2, 16

sub %1, 16

%%qword:

memcpy_epilog_avx %1, %2

%endmacro

; void *memcpy_avx2(rdi: const void dst[.n], rsi: void src[.n], rdx: size_t n)

memcpy_avx2:

xor ecx, ecx ; rcx = 0

mov r9, rdx ; r9 = n

cmp r9, 32 ; if(n < 16)

jb .end ; goto .end

vmovdqu ymm0, [rsi] ; Copy the first

vmovdqu [rdi], ymm0 ; 32 bytes

lea rcx, [rsi + 31] ; rcx = src + 31

and rcx, -32 ; rcx = align((src + 31), 32)

sub rcx, rsi ; rcx = align((src + 31), 32) - src

sub r9, rcx ; r9 = src + n - align((src + 31), 32)

.loop:

vmovdqa ymm0, [rsi + rcx] ; xmm0 = rsi[rcx]

vmovdqu [rdi + rcx], ymm0 ; rdi[rcx] = xmm0

add rcx, 32 ; rcx += 16

sub r9, 32 ; r9 -= 16

cmp r9, 32 ; if(r9 >= 16)

jae .loop ; goto .loop

.end:

memcpy_epilog_avx2 r9, rcx

mov rax, rdi

vzeroupper

retNote that for the “dummy version” I forced the optimization level to -O1.

Otherwise, gcc would replace the call to our custom copy function with a call to memcpy (when -O2) or write a vectorized loop using SSE2 extension (when -O3). You can check this in godbolt by changing the level of optimization.

This means that when writing casual C code with -O2 or -O3 level optimization, a simple for loop will often be identical or more efficient than a call to memcpy.

Here are the results I got on my “13th Gen Intel(R) Core(TM) i7-1355U (12) @ 5.00 GHz” using the b63 micro-benchmarking tool:

On my hardware, all the implementations are reasonably close, the slowest by far being the backward copy (setting RFLAGS.DF), the for loop, and the movb version (which copies only one byte at a time), but you may have different results depending on your CPU.

This is an example of string instructions being nearly as fast as copying using the greatest registers of the processor.

According to “Optimizing subroutines in assembly language”:

REP MOVSD and REP STOSD are quite fast if the repeat count is not too small. The largest word size (DWORD in 32-bit mode, QWORD in 64-bit mode) is preferred. Both source and destination should be aligned by the word size or better. In many cases, however, it is faster to use vector registers. Moving data in the largest available registers is faster than REP MOVSD and REP STOSD in most cases, especially on older processors.

The speed of rep movs and rep stos relies heavily on fast-string operations, which need certain processor-dependent conditions to be met.

The “Intel® 64 and IA-32 Architectures Software Developer’s Manual - Volume 1: Basic Architecture 7.3.9.3” defines fast-string operations:

To improve performance, more recent processors support modifications to the processor’s operation during the string store operations initiated with the MOVS, MOVSB, STOS, and STOSB instructions. This optimized operation, called fast-string operation, is used when the execution of one of those instructions meets certain initial conditions (see below). Instructions using fast-string operation effectively operate on the string in groups that may include multiple elements of the native data size (byte, word, doubleword, or quadword).

The general conditions for fast-string operations to happen are:

- The count of bytes must be high.

- Both source and destination must be aligned (on the size of the greatest registers you have).

- The direction must be forward.

- The distance between source and destination must be at least the cache line size.

- The memory type for both source and destination must be either write-back or write-combining (you can normally assume the latter condition is met).

So, how does the glibc implement the memcpy function?

We have two ways to know how the memcpy works underneath. The first one is looking at the source code of the glibc, the second one is to dump the disassembled machine code in gdb.

We can get the code of glibc v.2.40, the GNU implementation of libc, and verify its signature using these commands:

wget https://ftp.gnu.org/gnu/glibc/glibc-2.40.tar.xz https://ftp.gnu.org/gnu/glibc/glibc-2.40.tar.xz.sig

gpg --recv-keys 7273542B39962DF7B299931416792B4EA25340F8

gpg --verify glibc-2.40.tar.xz.sig glibc-2.40.tar.xz

tar xvf glibc-2.40.tar.xzYou can find the implementation of the memcpy function in the string/memcpy.c file:

/* Copy memory to memory until the specified number of bytes

has been copied. Overlap is NOT handled correctly.

Copyright (C) 1991-2024 Free Software Foundation, Inc.

This file is part of the GNU C Library.

The GNU C Library is free software; you can redistribute it and/or

modify it under the terms of the GNU Lesser General Public

License as published by the Free Software Foundation; either

version 2.1 of the License, or (at your option) any later version.

The GNU C Library is distributed in the hope that it will be useful,

but WITHOUT ANY WARRANTY; without even the implied warranty of

MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU

Lesser General Public License for more details.

You should have received a copy of the GNU Lesser General Public

License along with the GNU C Library; if not, see

<https://www.gnu.org/licenses/>. */

#include <string.h>

#include <memcopy.h>

#ifndef MEMCPY

# define MEMCPY memcpy

#endif

void *

MEMCPY (void *dstpp, const void *srcpp, size_t len)

{

unsigned long int dstp = (long int) dstpp;

unsigned long int srcp = (long int) srcpp;

/* Copy from the beginning to the end. */

/* If there not too few bytes to copy, use word copy. */

if (len >= OP_T_THRES)

{

/* Copy just a few bytes to make DSTP aligned. */

len -= (-dstp) % OPSIZ;

BYTE_COPY_FWD (dstp, srcp, (-dstp) % OPSIZ);

/* Copy whole pages from SRCP to DSTP by virtual address manipulation,

as much as possible. */

PAGE_COPY_FWD_MAYBE (dstp, srcp, len, len);

/* Copy from SRCP to DSTP taking advantage of the known alignment of

DSTP. Number of bytes remaining is put in the third argument,

i.e. in LEN. This number may vary from machine to machine. */

WORD_COPY_FWD (dstp, srcp, len, len);

/* Fall out and copy the tail. */

}

/* There are just a few bytes to copy. Use byte memory operations. */

BYTE_COPY_FWD (dstp, srcp, len);

return dstpp;

}

libc_hidden_builtin_def (MEMCPY)The included sysdeps/generic/memcopy.h file contains the definitions of the macro used by memcpy and an explanation of its internals:

/* The strategy of the memory functions is:

1. Copy bytes until the destination pointer is aligned.

2. Copy words in unrolled loops. If the source and destination

are not aligned in the same way, use word memory operations,

but shift and merge two read words before writing.

3. Copy the few remaining bytes.

This is fast on processors that have at least 10 registers for

allocation by GCC, and that can access memory at reg+const in one

instruction.

I made an "exhaustive" test of this memmove when I wrote it,

exhaustive in the sense that I tried all alignment and length

combinations, with and without overlap. */For i386 (ie x86_32) architectures, the sysdeps/i386/memcopy.h macro definitions will replace the generic ones defining the WORD_COPY_FWD and BYTE_COPY_FWD macros in terms of string instructions:

#undef BYTE_COPY_FWD

#define BYTE_COPY_FWD(dst_bp, src_bp, nbytes) \

do { \

int __d0; \

asm volatile(/* Clear the direction flag, so copying goes forward. */ \

"cld\n" \

/* Copy bytes. */ \

"rep\n" \

"movsb" : \

"=D" (dst_bp), "=S" (src_bp), "=c" (__d0) : \

"0" (dst_bp), "1" (src_bp), "2" (nbytes) : \

"memory"); \

} while (0)

#undef WORD_COPY_FWD

#define WORD_COPY_FWD(dst_bp, src_bp, nbytes_left, nbytes) \

do \

{ \

int __d0; \

asm volatile(/* Clear the direction flag, so copying goes forward. */ \

"cld\n" \

/* Copy longwords. */ \

"rep\n" \

"movsl" : \

"=D" (dst_bp), "=S" (src_bp), "=c" (__d0) : \

"0" (dst_bp), "1" (src_bp), "2" ((nbytes) / 4) : \

"memory"); \

(nbytes_left) = (nbytes) % 4; \

} while (0)For x86_64, the implementation is much more intricate as it requires choosing a memcpy implementation according to the vector extensions available. The sysdeps/x86_64/multiarch/memcpy.c file includes the ifunc-memmove.h, which defines an IFUNC_SELECTOR function that returns a pointer to a function according to the characteristics of the CPU running the program:

sysdeps/x86_64/multiarch/memcpy.c

/* Multiple versions of memcpy.

All versions must be listed in ifunc-impl-list.c.

Copyright (C) 2017-2024 Free Software Foundation, Inc.

This file is part of the GNU C Library.

The GNU C Library is free software; you can redistribute it and/or

modify it under the terms of the GNU Lesser General Public

License as published by the Free Software Foundation; either

version 2.1 of the License, or (at your option) any later version.

The GNU C Library is distributed in the hope that it will be useful,

but WITHOUT ANY WARRANTY; without even the implied warranty of

MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU

Lesser General Public License for more details.

You should have received a copy of the GNU Lesser General Public

License along with the GNU C Library; if not, see

<https://www.gnu.org/licenses/>. */

/* Define multiple versions only for the definition in libc. */

#if IS_IN (libc)

# define memcpy __redirect_memcpy

# include <string.h>

# undef memcpy

# define SYMBOL_NAME memcpy

# include "ifunc-memmove.h"

libc_ifunc_redirected (__redirect_memcpy, __new_memcpy,

IFUNC_SELECTOR ());

# ifdef SHARED

__hidden_ver1 (__new_memcpy, __GI_memcpy, __redirect_memcpy)

__attribute__ ((visibility ("hidden")));

# endif

# include <shlib-compat.h>

versioned_symbol (libc, __new_memcpy, memcpy, GLIBC_2_14);

#endifsysdeps/x86_64/multiarch/ifunc-memmove.h

/* Common definition for memcpy, mempcpy and memmove implementation.

All versions must be listed in ifunc-impl-list.c.

Copyright (C) 2017-2024 Free Software Foundation, Inc.

This file is part of the GNU C Library.

The GNU C Library is free software; you can redistribute it and/or

modify it under the terms of the GNU Lesser General Public

License as published by the Free Software Foundation; either

version 2.1 of the License, or (at your option) any later version.

The GNU C Library is distributed in the hope that it will be useful,

but WITHOUT ANY WARRANTY; without even the implied warranty of

MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU

Lesser General Public License for more details.

You should have received a copy of the GNU Lesser General Public

License along with the GNU C Library; if not, see

<https://www.gnu.org/licenses/>. */

#include <init-arch.h>

extern __typeof (REDIRECT_NAME) OPTIMIZE (erms) attribute_hidden;

extern __typeof (REDIRECT_NAME) OPTIMIZE (avx512_unaligned)

attribute_hidden;

extern __typeof (REDIRECT_NAME) OPTIMIZE (avx512_unaligned_erms)

attribute_hidden;

extern __typeof (REDIRECT_NAME) OPTIMIZE (avx512_no_vzeroupper)

attribute_hidden;

extern __typeof (REDIRECT_NAME) OPTIMIZE (evex_unaligned)

attribute_hidden;

extern __typeof (REDIRECT_NAME) OPTIMIZE (evex_unaligned_erms)

attribute_hidden;

extern __typeof (REDIRECT_NAME) OPTIMIZE (avx_unaligned) attribute_hidden;

extern __typeof (REDIRECT_NAME) OPTIMIZE (avx_unaligned_erms)

attribute_hidden;

extern __typeof (REDIRECT_NAME) OPTIMIZE (avx_unaligned_rtm)

attribute_hidden;

extern __typeof (REDIRECT_NAME) OPTIMIZE (avx_unaligned_erms_rtm)

attribute_hidden;

extern __typeof (REDIRECT_NAME) OPTIMIZE (ssse3) attribute_hidden;

extern __typeof (REDIRECT_NAME) OPTIMIZE (sse2_unaligned)

attribute_hidden;

extern __typeof (REDIRECT_NAME) OPTIMIZE (sse2_unaligned_erms)

attribute_hidden;

static inline void *

IFUNC_SELECTOR (void)

{

const struct cpu_features *cpu_features = __get_cpu_features ();

if (CPU_FEATURES_ARCH_P (cpu_features, Prefer_ERMS)

|| CPU_FEATURES_ARCH_P (cpu_features, Prefer_FSRM))

return OPTIMIZE (erms);

if (X86_ISA_CPU_FEATURE_USABLE_P (cpu_features, AVX512F)

&& !CPU_FEATURES_ARCH_P (cpu_features, Prefer_No_AVX512))

{

if (X86_ISA_CPU_FEATURE_USABLE_P (cpu_features, AVX512VL))

{

if (CPU_FEATURE_USABLE_P (cpu_features, ERMS))

return OPTIMIZE (avx512_unaligned_erms);

return OPTIMIZE (avx512_unaligned);

}

return OPTIMIZE (avx512_no_vzeroupper);

}

if (X86_ISA_CPU_FEATURES_ARCH_P (cpu_features,

AVX_Fast_Unaligned_Load, ))

{

if (X86_ISA_CPU_FEATURE_USABLE_P (cpu_features, AVX512VL))

{

if (CPU_FEATURE_USABLE_P (cpu_features, ERMS))

return OPTIMIZE (evex_unaligned_erms);

return OPTIMIZE (evex_unaligned);

}

if (CPU_FEATURE_USABLE_P (cpu_features, RTM))

{

if (CPU_FEATURE_USABLE_P (cpu_features, ERMS))

return OPTIMIZE (avx_unaligned_erms_rtm);

return OPTIMIZE (avx_unaligned_rtm);

}

if (X86_ISA_CPU_FEATURES_ARCH_P (cpu_features,

Prefer_No_VZEROUPPER, !))

{

if (CPU_FEATURE_USABLE_P (cpu_features, ERMS))

return OPTIMIZE (avx_unaligned_erms);

return OPTIMIZE (avx_unaligned);

}

}

if (X86_ISA_CPU_FEATURE_USABLE_P (cpu_features, SSSE3)

/* Leave this as runtime check. The SSSE3 is optimized almost

exclusively for avoiding unaligned memory access during the

copy and by and large is not better than the sse2

implementation as a general purpose memmove. */

&& !CPU_FEATURES_ARCH_P (cpu_features, Fast_Unaligned_Copy))

{

return OPTIMIZE (ssse3);

}

if (CPU_FEATURE_USABLE_P (cpu_features, ERMS))

return OPTIMIZE (sse2_unaligned_erms);

return OPTIMIZE (sse2_unaligned);

}You can learn more about indirect functions in glibc in this blog post.

What you need to understand here is that the glibc will be able to determine at runtime what function should be called when calling memcpy based on your hardware capabilities.

Let’s use gdb to find out how our call to memcpy will be resolved at runtime.

Run the following commands in gdb to run until the first call to memcpy:

b main

run

b memcpy

c

disassembleYou should get something like this:

<+0>: endbr64

<+4>: mov rcx,QWORD PTR [rip+0x13b0f5] # 0x7ffff7fa0eb0

<+11>: lea rax,[rip+0x769e] # 0x7ffff7e6d460 <__memmove_erms>

<+18>: mov edx,DWORD PTR [rcx+0x1c4]

<+24>: test dh,0x90

<+27>: jne 0x7ffff7e65e41 <memcpy@@GLIBC_2.14+145>

<+29>: mov esi,DWORD PTR [rcx+0xb8]

<+35>: test esi,0x10000

<+41>: jne 0x7ffff7e65e48 <memcpy@@GLIBC_2.14+152>

<+43>: test dh,0x2

<+46>: je 0x7ffff7e65e20 <memcpy@@GLIBC_2.14+112>

<+48>: test esi,esi

<+50>: js 0x7ffff7e65e88 <memcpy@@GLIBC_2.14+216>

<+56>: test esi,0x800

<+62>: je 0x7ffff7e65e10 <memcpy@@GLIBC_2.14+96>

<+64>: and esi,0x200

<+70>: lea rdx,[rip+0xc9d43] # 0x7ffff7f2fb40 <__memmove_avx_unaligned_erms_rtm>

<+77>: lea rax,[rip+0xc9cac] # 0x7ffff7f2fab0 <__memmove_avx_unaligned_rtm>

<+84>: cmovne rax,rdx

<+88>: ret

<+89>: nop DWORD PTR [rax+0x0]

<+96>: test dh,0x8

<+99>: je 0x7ffff7e65ea8 <memcpy@@GLIBC_2.14+248>

<+105>: nop DWORD PTR [rax+0x0]

<+112>: test BYTE PTR [rcx+0x9d],0x2

<+119>: jne 0x7ffff7e65e78 <memcpy@@GLIBC_2.14+200>

<+121>: and esi,0x200

<+127>: lea rax,[rip+0x774a] # 0x7ffff7e6d580 <__memmove_sse2_unaligned_erms>

<+134>: lea rdx,[rip+0x76b3] # 0x7ffff7e6d4f0 <memcpy@GLIBC_2.2.5>

<+141>: cmove rax,rdx

<+145>: ret

<+146>: nop WORD PTR [rax+rax*1+0x0]

<+152>: test dh,0x20

<+155>: jne 0x7ffff7e65ddb <memcpy@@GLIBC_2.14+43>

<+157>: lea rax,[rip+0xdc23c] # 0x7ffff7f42090 <__memmove_avx512_no_vzeroupper>

<+164>: test esi,esi

<+166>: jns 0x7ffff7e65e41 <memcpy@@GLIBC_2.14+145>

<+168>: and esi,0x200

<+174>: lea rdx,[rip+0xda19b] # 0x7ffff7f40000 <__memmove_avx512_unaligned_erms>

<+181>: lea rax,[rip+0xda104] # 0x7ffff7f3ff70 <__memmove_avx512_unaligned>

<+188>: cmovne rax,rdx

<+192>: ret

<+193>: nop DWORD PTR [rax+0x0]

<+200>: and edx,0x20

<+203>: lea rax,[rip+0xdcc3e] # 0x7ffff7f42ac0 <__memmove_ssse3>

<+210>: jne 0x7ffff7e65e29 <memcpy@@GLIBC_2.14+121>

<+212>: ret

<+213>: nop DWORD PTR [rax]

<+216>: and esi,0x200

<+222>: lea rdx,[rip+0xd112b] # 0x7ffff7f36fc0 <__memmove_evex_unaligned_erms>

<+229>: lea rax,[rip+0xd1094] # 0x7ffff7f36f30 <__memmove_evex_unaligned>

<+236>: cmovne rax,rdx

<+240>: ret

<+241>: nop DWORD PTR [rax+0x0]

<+248>: and esi,0x200

<+254>: lea rdx,[rip+0xc124b] # 0x7ffff7f27100 <__memmove_avx_unaligned_erms>

<+261>: lea rax,[rip+0xc11b4] # 0x7ffff7f27070 <__memmove_avx_unaligned>

<+268>: cmovne rax,rdx

<+272>: retInstead of breaking inside the code of the memcpy function, the glibc runtime called the IFUNC_SELECTOR.

This function will return the pointer that will be used for later calls of the memcpy function.

Let’s now hit the finish gdb command to see what the selector returns in rax.

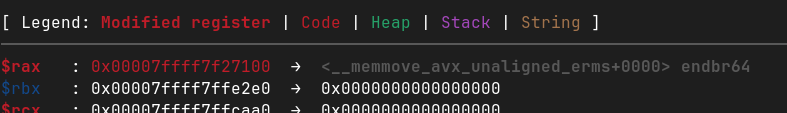

Return value of IFUNC_SELECTOR

The IFUNC_SELECTOR returned a pointer to __memmove_avx_unaligned_erms. This function is defined in sysdeps/x86_64/multiarch/memmove-avx-unaligned-erms.S and sysdeps/x86_64/multiarch/memmove-vec-unaligned-erms.S with handwritten assembly.

But it’s quite difficult to figure out the execution flow while reading this, so let’s put a breakpoint on this symbol, hit continue, and run this function step by step.

The “erms” parts of __memmove_avx_unaligned_erms stands for “Enhanced Rep Movsb/Stosb” which is what we called earlier fast-string operation.

It is named this way in the “Intel® 64 and IA-32 Architectures Optimization Reference Manual: Volume 1” section 3.7.6 and corresponds to a byte in cpuid which indicates that this feature is available.

I highlighted the code reached during the execution of the function:

__memmove_avx_unaligned_erms

<+0>: endbr64

<+4>: mov rax,rdi ; Copy the pointer to destination into rax

<+7>: cmp rdx,0x20 ; Compare size to 0x20 (32 bytes)

<+11>: jb 0x7ffff7f27130 <__memmove_avx_unaligned_erms+48> ; If smaller jump

<+13>: vmovdqu ymm0,YMMWORD PTR [rsi] ; Otherwise loads the first 32 bytes of src into ymm0

<+17>: cmp rdx,0x40 ; Compare size to 0x40 (64 bytes)

<+21>: ja 0x7ffff7f271c0 <__memmove_avx_unaligned_erms+192> ; If above jump

<+27>: vmovdqu ymm1,YMMWORD PTR [rsi+rdx*1-0x20]

<+33>: vmovdqu YMMWORD PTR [rdi],ymm0

<+37>: vmovdqu YMMWORD PTR [rdi+rdx*1-0x20],ymm1

<+43>: vzeroupper

<+46>: ret

<+47>: nop

<+48>: cmp edx,0x10

<+51>: jae 0x7ffff7f27162 <__memmove_avx_unaligned_erms+98>

<+53>: cmp edx,0x8

<+56>: jae 0x7ffff7f27180 <__memmove_avx_unaligned_erms+128>

<+58>: cmp edx,0x4

<+61>: jae 0x7ffff7f27155 <__memmove_avx_unaligned_erms+85>

<+63>: cmp edx,0x1

<+66>: jl 0x7ffff7f27154 <__memmove_avx_unaligned_erms+84>

<+68>: mov cl,BYTE PTR [rsi]

<+70>: je 0x7ffff7f27152 <__memmove_avx_unaligned_erms+82>

<+72>: movzx esi,WORD PTR [rsi+rdx*1-0x2]

<+77>: mov WORD PTR [rdi+rdx*1-0x2],si

<+82>: mov BYTE PTR [rdi],cl

<+84>: ret

<+85>: mov ecx,DWORD PTR [rsi+rdx*1-0x4]

<+89>: mov esi,DWORD PTR [rsi]

<+91>: mov DWORD PTR [rdi+rdx*1-0x4],ecx

<+95>: mov DWORD PTR [rdi],esi

<+97>: ret

<+98>: vmovdqu xmm0,XMMWORD PTR [rsi]

<+102>: vmovdqu xmm1,XMMWORD PTR [rsi+rdx*1-0x10]

<+108>: vmovdqu XMMWORD PTR [rdi],xmm0

<+112>: vmovdqu XMMWORD PTR [rdi+rdx*1-0x10],xmm1

<+118>: ret

<+119>: nop WORD PTR [rax+rax*1+0x0]

<+128>: mov rcx,QWORD PTR [rsi+rdx*1-0x8]

<+133>: mov rsi,QWORD PTR [rsi]

<+136>: mov QWORD PTR [rdi],rsi

<+139>: mov QWORD PTR [rdi+rdx*1-0x8],rcx

<+144>: ret

<+145>: vmovdqu ymm2,YMMWORD PTR [rsi+rdx*1-0x20]

<+151>: vmovdqu ymm3,YMMWORD PTR [rsi+rdx*1-0x40]

<+157>: vmovdqu YMMWORD PTR [rdi],ymm0

<+161>: vmovdqu YMMWORD PTR [rdi+0x20],ymm1

<+166>: vmovdqu YMMWORD PTR [rdi+rdx*1-0x20],ymm2

<+172>: vmovdqu YMMWORD PTR [rdi+rdx*1-0x40],ymm3

<+178>: vzeroupper

<+181>: ret

<+182>: cs nop WORD PTR [rax+rax*1+0x0]

<+192>: cmp rdx,QWORD PTR [rip+0x7a051] # 0x7ffff7fa1218 <__x86_rep_movsb_threshold>

<+199>: ja 0x7ffff7f273c0 <__memmove_avx_unaligned_erms+704> ; Jump if size > __x86_rep_movsb_threshold

<+205>: cmp rdx,0x100

<+212>: ja 0x7ffff7f27235 <__memmove_avx_unaligned_erms+309>

<+214>: vmovdqu ymm1,YMMWORD PTR [rsi+0x20]

<+219>: cmp rdx,0x80

<+226>: jbe 0x7ffff7f27191 <__memmove_avx_unaligned_erms+145>

<+228>: vmovdqu ymm2,YMMWORD PTR [rsi+0x40]

<+233>: vmovdqu ymm3,YMMWORD PTR [rsi+0x60]

<+238>: vmovdqu ymm4,YMMWORD PTR [rsi+rdx*1-0x20]

<+244>: vmovdqu ymm5,YMMWORD PTR [rsi+rdx*1-0x40]

<+250>: vmovdqu ymm6,YMMWORD PTR [rsi+rdx*1-0x60]

<+256>: vmovdqu ymm7,YMMWORD PTR [rsi+rdx*1-0x80]

<+262>: vmovdqu YMMWORD PTR [rdi],ymm0

<+266>: vmovdqu YMMWORD PTR [rdi+0x20],ymm1

<+271>: vmovdqu YMMWORD PTR [rdi+0x40],ymm2

<+276>: vmovdqu YMMWORD PTR [rdi+0x60],ymm3

<+281>: vmovdqu YMMWORD PTR [rdi+rdx*1-0x20],ymm4

<+287>: vmovdqu YMMWORD PTR [rdi+rdx*1-0x40],ymm5

<+293>: vmovdqu YMMWORD PTR [rdi+rdx*1-0x60],ymm6

<+299>: vmovdqu YMMWORD PTR [rdi+rdx*1-0x80],ymm7

<+305>: vzeroupper

<+308>: ret

<+309>: mov rcx,rdi

<+312>: sub rcx,rsi

<+315>: cmp rcx,rdx

<+318>: jb 0x7ffff7f272f0 <__memmove_avx_unaligned_erms+496>

<+324>: cmp rdx,QWORD PTR [rip+0x80fe5] # 0x7ffff7fa8230 <__x86_shared_non_temporal_threshold>

<+331>: ja 0x7ffff7f27420 <__memmove_avx_unaligned_erms+800>

<+337>: lea r8,[rcx+rdx*1]

<+341>: xor r8,rcx

<+344>: shr r8,0x3f

<+348>: and ecx,0xf00

<+354>: add ecx,r8d

<+357>: je 0x7ffff7f272f5 <__memmove_avx_unaligned_erms+501>

<+363>: vmovdqu ymm5,YMMWORD PTR [rsi+rdx*1-0x20]

<+369>: vmovdqu ymm6,YMMWORD PTR [rsi+rdx*1-0x40]

<+375>: mov rcx,rdi

<+378>: or rdi,0x1f

<+382>: vmovdqu ymm7,YMMWORD PTR [rsi+rdx*1-0x60]

<+388>: vmovdqu ymm8,YMMWORD PTR [rsi+rdx*1-0x80]

<+394>: sub rsi,rcx

<+397>: inc rdi

<+400>: add rsi,rdi

<+403>: lea rdx,[rcx+rdx*1-0x80]

<+408>: nop DWORD PTR [rax+rax*1+0x0]

<+416>: vmovdqu ymm1,YMMWORD PTR [rsi]

<+420>: vmovdqu ymm2,YMMWORD PTR [rsi+0x20]

<+425>: vmovdqu ymm3,YMMWORD PTR [rsi+0x40]

<+430>: vmovdqu ymm4,YMMWORD PTR [rsi+0x60]

<+435>: sub rsi,0xffffffffffffff80

<+439>: vmovdqa YMMWORD PTR [rdi],ymm1

<+443>: vmovdqa YMMWORD PTR [rdi+0x20],ymm2

<+448>: vmovdqa YMMWORD PTR [rdi+0x40],ymm3

<+453>: vmovdqa YMMWORD PTR [rdi+0x60],ymm4

<+458>: sub rdi,0xffffffffffffff80

<+462>: cmp rdx,rdi

<+465>: ja 0x7ffff7f272a0 <__memmove_avx_unaligned_erms+416>

<+467>: vmovdqu YMMWORD PTR [rdx+0x60],ymm5

<+472>: vmovdqu YMMWORD PTR [rdx+0x40],ymm6

<+477>: vmovdqu YMMWORD PTR [rdx+0x20],ymm7

<+482>: vmovdqu YMMWORD PTR [rdx],ymm8

<+486>: vmovdqu YMMWORD PTR [rcx],ymm0

<+490>: vzeroupper

<+493>: ret

<+494>: xchg ax,ax

<+496>: test rcx,rcx

<+499>: je 0x7ffff7f272ea <__memmove_avx_unaligned_erms+490>

<+501>: vmovdqu ymm5,YMMWORD PTR [rsi+0x20]

<+506>: vmovdqu ymm6,YMMWORD PTR [rsi+0x40]

<+511>: lea rcx,[rdi+rdx*1-0x81]

<+519>: vmovdqu ymm7,YMMWORD PTR [rsi+0x60]

<+524>: vmovdqu ymm8,YMMWORD PTR [rsi+rdx*1-0x20]

<+530>: sub rsi,rdi

<+533>: and rcx,0xffffffffffffffe0

<+537>: add rsi,rcx

<+540>: nop DWORD PTR [rax+0x0]

<+544>: vmovdqu ymm1,YMMWORD PTR [rsi+0x60]

<+549>: vmovdqu ymm2,YMMWORD PTR [rsi+0x40]

<+554>: vmovdqu ymm3,YMMWORD PTR [rsi+0x20]

<+559>: vmovdqu ymm4,YMMWORD PTR [rsi]

<+563>: add rsi,0xffffffffffffff80

<+567>: vmovdqa YMMWORD PTR [rcx+0x60],ymm1

<+572>: vmovdqa YMMWORD PTR [rcx+0x40],ymm2

<+577>: vmovdqa YMMWORD PTR [rcx+0x20],ymm3

<+582>: vmovdqa YMMWORD PTR [rcx],ymm4

<+586>: add rcx,0xffffffffffffff80

<+590>: cmp rdi,rcx

<+593>: jb 0x7ffff7f27320 <__memmove_avx_unaligned_erms+544>

<+595>: vmovdqu YMMWORD PTR [rdi],ymm0

<+599>: vmovdqu YMMWORD PTR [rdi+0x20],ymm5

<+604>: vmovdqu YMMWORD PTR [rdi+0x40],ymm6

<+609>: vmovdqu YMMWORD PTR [rdi+0x60],ymm7

<+614>: vmovdqu YMMWORD PTR [rdx+rdi*1-0x20],ymm8

<+620>: vzeroupper

<+623>: ret

<+624>: data16 cs nop WORD PTR [rax+rax*1+0x0]

<+635>: nop DWORD PTR [rax+rax*1+0x0]

<+640>: vmovdqu ymm1,YMMWORD PTR [rsi+0x20]

<+645>: test ecx,0xe00

<+651>: jne 0x7ffff7f273f2 <__memmove_avx_unaligned_erms+754>

<+653>: mov r9,rcx

<+656>: lea rcx,[rsi+rdx*1-0x1]

<+661>: or rsi,0x3f

<+665>: lea rdi,[rsi+r9*1+0x1]

<+670>: sub rcx,rsi

<+673>: inc rsi

<+676>: rep movs BYTE PTR es:[rdi],BYTE PTR ds:[rsi]

<+678>: vmovdqu YMMWORD PTR [r8],ymm0

<+683>: vmovdqu YMMWORD PTR [r8+0x20],ymm1

<+689>: vzeroupper

<+692>: ret

<+693>: data16 cs nop WORD PTR [rax+rax*1+0x0]

<+704>: mov rcx,rdi ; Copy pointer to dest in rcx

<+707>: sub rcx,rsi ; rcx = dest - src

<+710>: cmp rcx,rdx ; if (rcx < size)

<+713>: jb 0x7ffff7f272f0 <__memmove_avx_unaligned_erms+496> ; jump

<+719>: mov r8,rdi ; Copy pointer to dest in r8

<+722>: cmp rdx,QWORD PTR [rip+0x80e4f] # 0x7ffff7fa8228 <__x86_rep_movsb_stop_threshold>

<+729>: jae 0x7ffff7f27420 <__memmove_avx_unaligned_erms+800> ; if(size >= __x86_rep_movsb_stop_threshold) jump

<+731>: test BYTE PTR [rip+0x80e3e],0x1 # 0x7ffff7fa8220 <__x86_string_control>

<+738>: je 0x7ffff7f27380 <__memmove_avx_unaligned_erms+640> ; if(__x86_string_control & 0x1 == 0) jump

<+740>: cmp ecx,0xffffffc0 ; if(rcx > 0xffffffc0)

<+743>: ja 0x7ffff7f2726b <__memmove_avx_unaligned_erms+363> ; jump

<+749>: vmovdqu ymm1,YMMWORD PTR [rsi+0x20] ; Loads the first 32th-63th bytes of src into ymm1

<+754>: sub rsi,rdi ; rsi = src - dest

<+757>: add rdi,0x3f ; rdi = dest + 63 bytes

<+761>: lea rcx,[r8+rdx*1] ; rcx = dest + size

<+765>: and rdi,0xffffffffffffffc0 ; Align rdi on 64 bytes

<+769>: add rsi,rdi ; rsi = (src - dest + (dest{64 bytes aligned}))

<+772>: sub rcx,rdi ; rcx = dest + size - dest{64 bytes aligned}

<+775>: rep movs BYTE PTR es:[rdi],BYTE PTR ds:[rsi] ; OUR REP MOVSB INSTRUCTION !!!

<+777>: vmovdqu YMMWORD PTR [r8],ymm0 ; Copy ymm0 content to dest

<+782>: vmovdqu YMMWORD PTR [r8+0x20],ymm1 ; Copy ymm1 content to dest

<+788>: vzeroupper ; Reset for SSE2

<+791>: ret

<+792>: nop DWORD PTR [rax+rax*1+0x0]

<+800>: mov r11,QWORD PTR [rip+0x80e09] # 0x7ffff7fa8230 <__x86_shared_non_temporal_threshold>

<+807>: cmp rdx,r11

<+810>: jb 0x7ffff7f27251 <__memmove_avx_unaligned_erms+337>

<+816>: neg rcx

<+819>: cmp rdx,rcx

<+822>: ja 0x7ffff7f2726b <__memmove_avx_unaligned_erms+363>

<+828>: vmovdqu ymm1,YMMWORD PTR [rsi+0x20]

<+833>: vmovdqu YMMWORD PTR [rdi],ymm0

<+837>: vmovdqu YMMWORD PTR [rdi+0x20],ymm1

<+842>: mov r8,rdi

<+845>: and r8,0x3f

<+849>: sub r8,0x40

<+853>: sub rsi,r8

<+856>: sub rdi,r8

<+859>: add rdx,r8

<+862>: not ecx

<+864>: mov r10,rdx

<+867>: test ecx,0xf00

<+873>: je 0x7ffff7f275f0 <__memmove_avx_unaligned_erms+1264>

<+879>: shl r11,0x4

<+883>: cmp rdx,r11

<+886>: jae 0x7ffff7f275f0 <__memmove_avx_unaligned_erms+1264>

<+892>: and edx,0x1fff

<+898>: shr r10,0xd

<+902>: cs nop WORD PTR [rax+rax*1+0x0]

<+912>: mov ecx,0x20

<+917>: prefetcht0 BYTE PTR [rsi+0x80]

<+924>: prefetcht0 BYTE PTR [rsi+0xc0]

<+931>: prefetcht0 BYTE PTR [rsi+0x100]

<+938>: prefetcht0 BYTE PTR [rsi+0x140]

<+945>: prefetcht0 BYTE PTR [rsi+0x1080]

<+952>: prefetcht0 BYTE PTR [rsi+0x10c0]

<+959>: prefetcht0 BYTE PTR [rsi+0x1100]

<+966>: prefetcht0 BYTE PTR [rsi+0x1140]

<+973>: vmovdqu ymm0,YMMWORD PTR [rsi]

<+977>: vmovdqu ymm1,YMMWORD PTR [rsi+0x20]

<+982>: vmovdqu ymm2,YMMWORD PTR [rsi+0x40]

<+987>: vmovdqu ymm3,YMMWORD PTR [rsi+0x60]

<+992>: vmovdqu ymm4,YMMWORD PTR [rsi+0x1000]

<+1000>: vmovdqu ymm5,YMMWORD PTR [rsi+0x1020]

<+1008>: vmovdqu ymm6,YMMWORD PTR [rsi+0x1040]

<+1016>: vmovdqu ymm7,YMMWORD PTR [rsi+0x1060]

<+1024>: sub rsi,0xffffffffffffff80

<+1028>: vmovntdq YMMWORD PTR [rdi],ymm0

<+1032>: vmovntdq YMMWORD PTR [rdi+0x20],ymm1

<+1037>: vmovntdq YMMWORD PTR [rdi+0x40],ymm2

<+1042>: vmovntdq YMMWORD PTR [rdi+0x60],ymm3

<+1047>: vmovntdq YMMWORD PTR [rdi+0x1000],ymm4

<+1055>: vmovntdq YMMWORD PTR [rdi+0x1020],ymm5

<+1063>: vmovntdq YMMWORD PTR [rdi+0x1040],ymm6

<+1071>: vmovntdq YMMWORD PTR [rdi+0x1060],ymm7

<+1079>: sub rdi,0xffffffffffffff80

<+1083>: dec ecx

<+1085>: jne 0x7ffff7f27495 <__memmove_avx_unaligned_erms+917>

<+1091>: add rdi,0x1000

<+1098>: add rsi,0x1000

<+1105>: dec r10

<+1108>: jne 0x7ffff7f27490 <__memmove_avx_unaligned_erms+912>

<+1114>: sfence

<+1117>: cmp edx,0x80

<+1123>: jbe 0x7ffff7f275ba <__memmove_avx_unaligned_erms+1210>

<+1125>: prefetcht0 BYTE PTR [rsi+0x80]

<+1132>: prefetcht0 BYTE PTR [rsi+0xc0]

<+1139>: prefetcht0 BYTE PTR [rdi+0x80]

<+1146>: prefetcht0 BYTE PTR [rdi+0xc0]

<+1153>: vmovdqu ymm0,YMMWORD PTR [rsi]

<+1157>: vmovdqu ymm1,YMMWORD PTR [rsi+0x20]

<+1162>: vmovdqu ymm2,YMMWORD PTR [rsi+0x40]

<+1167>: vmovdqu ymm3,YMMWORD PTR [rsi+0x60]

<+1172>: sub rsi,0xffffffffffffff80

<+1176>: add edx,0xffffff80

<+1179>: vmovdqa YMMWORD PTR [rdi],ymm0

<+1183>: vmovdqa YMMWORD PTR [rdi+0x20],ymm1

<+1188>: vmovdqa YMMWORD PTR [rdi+0x40],ymm2

<+1193>: vmovdqa YMMWORD PTR [rdi+0x60],ymm3

<+1198>: sub rdi,0xffffffffffffff80

<+1202>: cmp edx,0x80

<+1208>: ja 0x7ffff7f27565 <__memmove_avx_unaligned_erms+1125>

<+1210>: vmovdqu ymm0,YMMWORD PTR [rsi+rdx*1-0x80]

<+1216>: vmovdqu ymm1,YMMWORD PTR [rsi+rdx*1-0x60]

<+1222>: vmovdqu ymm2,YMMWORD PTR [rsi+rdx*1-0x40]

<+1228>: vmovdqu ymm3,YMMWORD PTR [rsi+rdx*1-0x20]

<+1234>: vmovdqu YMMWORD PTR [rdi+rdx*1-0x80],ymm0

<+1240>: vmovdqu YMMWORD PTR [rdi+rdx*1-0x60],ymm1

<+1246>: vmovdqu YMMWORD PTR [rdi+rdx*1-0x40],ymm2

<+1252>: vmovdqu YMMWORD PTR [rdi+rdx*1-0x20],ymm3

<+1258>: vzeroupper

<+1261>: ret

<+1262>: xchg ax,ax

<+1264>: and edx,0x3fff

<+1270>: shr r10,0xe

<+1274>: nop WORD PTR [rax+rax*1+0x0]

<+1280>: mov ecx,0x20

<+1285>: prefetcht0 BYTE PTR [rsi+0x80]

<+1292>: prefetcht0 BYTE PTR [rsi+0xc0]

<+1299>: prefetcht0 BYTE PTR [rsi+0x1080]

<+1306>: prefetcht0 BYTE PTR [rsi+0x10c0]

<+1313>: prefetcht0 BYTE PTR [rsi+0x2080]

<+1320>: prefetcht0 BYTE PTR [rsi+0x20c0]

<+1327>: prefetcht0 BYTE PTR [rsi+0x3080]

<+1334>: prefetcht0 BYTE PTR [rsi+0x30c0]

<+1341>: vmovdqu ymm0,YMMWORD PTR [rsi]

<+1345>: vmovdqu ymm1,YMMWORD PTR [rsi+0x20]

<+1350>: vmovdqu ymm2,YMMWORD PTR [rsi+0x40]

<+1355>: vmovdqu ymm3,YMMWORD PTR [rsi+0x60]

<+1360>: vmovdqu ymm4,YMMWORD PTR [rsi+0x1000]

<+1368>: vmovdqu ymm5,YMMWORD PTR [rsi+0x1020]

<+1376>: vmovdqu ymm6,YMMWORD PTR [rsi+0x1040]

<+1384>: vmovdqu ymm7,YMMWORD PTR [rsi+0x1060]

<+1392>: vmovdqu ymm8,YMMWORD PTR [rsi+0x2000]

<+1400>: vmovdqu ymm9,YMMWORD PTR [rsi+0x2020]

<+1408>: vmovdqu ymm10,YMMWORD PTR [rsi+0x2040]

<+1416>: vmovdqu ymm11,YMMWORD PTR [rsi+0x2060]

<+1424>: vmovdqu ymm12,YMMWORD PTR [rsi+0x3000]

<+1432>: vmovdqu ymm13,YMMWORD PTR [rsi+0x3020]

<+1440>: vmovdqu ymm14,YMMWORD PTR [rsi+0x3040]

<+1448>: vmovdqu ymm15,YMMWORD PTR [rsi+0x3060]

<+1456>: sub rsi,0xffffffffffffff80

<+1460>: vmovntdq YMMWORD PTR [rdi],ymm0

<+1464>: vmovntdq YMMWORD PTR [rdi+0x20],ymm1

<+1469>: vmovntdq YMMWORD PTR [rdi+0x40],ymm2

<+1474>: vmovntdq YMMWORD PTR [rdi+0x60],ymm3

<+1479>: vmovntdq YMMWORD PTR [rdi+0x1000],ymm4

<+1487>: vmovntdq YMMWORD PTR [rdi+0x1020],ymm5

<+1495>: vmovntdq YMMWORD PTR [rdi+0x1040],ymm6

<+1503>: vmovntdq YMMWORD PTR [rdi+0x1060],ymm7

<+1511>: vmovntdq YMMWORD PTR [rdi+0x2000],ymm8

<+1519>: vmovntdq YMMWORD PTR [rdi+0x2020],ymm9

<+1527>: vmovntdq YMMWORD PTR [rdi+0x2040],ymm10

<+1535>: vmovntdq YMMWORD PTR [rdi+0x2060],ymm11

<+1543>: vmovntdq YMMWORD PTR [rdi+0x3000],ymm12

<+1551>: vmovntdq YMMWORD PTR [rdi+0x3020],ymm13

<+1559>: vmovntdq YMMWORD PTR [rdi+0x3040],ymm14

<+1567>: vmovntdq YMMWORD PTR [rdi+0x3060],ymm15

<+1575>: sub rdi,0xffffffffffffff80

<+1579>: dec ecx

<+1581>: jne 0x7ffff7f27605 <__memmove_avx_unaligned_erms+1285>

<+1587>: add rdi,0x3000

<+1594>: add rsi,0x3000

<+1601>: dec r10

<+1604>: jne 0x7ffff7f27600 <__memmove_avx_unaligned_erms+1280>

<+1610>: sfence

<+1613>: cmp edx,0x80

<+1619>: jbe 0x7ffff7f277aa <__memmove_avx_unaligned_erms+1706>

<+1621>: prefetcht0 BYTE PTR [rsi+0x80]

<+1628>: prefetcht0 BYTE PTR [rsi+0xc0]

<+1635>: prefetcht0 BYTE PTR [rdi+0x80]

<+1642>: prefetcht0 BYTE PTR [rdi+0xc0]

<+1649>: vmovdqu ymm0,YMMWORD PTR [rsi]

<+1653>: vmovdqu ymm1,YMMWORD PTR [rsi+0x20]

<+1658>: vmovdqu ymm2,YMMWORD PTR [rsi+0x40]

<+1663>: vmovdqu ymm3,YMMWORD PTR [rsi+0x60]

<+1668>: sub rsi,0xffffffffffffff80

<+1672>: add edx,0xffffff80

<+1675>: vmovdqa YMMWORD PTR [rdi],ymm0

<+1679>: vmovdqa YMMWORD PTR [rdi+0x20],ymm1

<+1684>: vmovdqa YMMWORD PTR [rdi+0x40],ymm2

<+1689>: vmovdqa YMMWORD PTR [rdi+0x60],ymm3

<+1694>: sub rdi,0xffffffffffffff80

<+1698>: cmp edx,0x80

<+1704>: ja 0x7ffff7f27755 <__memmove_avx_unaligned_erms+1621>

<+1706>: vmovdqu ymm0,YMMWORD PTR [rsi+rdx*1-0x80]

<+1712>: vmovdqu ymm1,YMMWORD PTR [rsi+rdx*1-0x60]

<+1718>: vmovdqu ymm2,YMMWORD PTR [rsi+rdx*1-0x40]

<+1724>: vmovdqu ymm3,YMMWORD PTR [rsi+rdx*1-0x20]

<+1730>: vmovdqu YMMWORD PTR [rdi+rdx*1-0x80],ymm0

<+1736>: vmovdqu YMMWORD PTR [rdi+rdx*1-0x60],ymm1

<+1742>: vmovdqu YMMWORD PTR [rdi+rdx*1-0x40],ymm2

<+1748>: vmovdqu YMMWORD PTR [rdi+rdx*1-0x20],ymm3

<+1754>: vzeroupper

<+1757>: retRemember: We are copying 4Mib of data, so rdx = 0x400000.

The __memmove_avx_unaligned_erms performs several tests over the rdx register, which contains the size of our buffer (3rd argument for System V ABI).

Here is each test performed and the result of the conditional jump:

| Condition | Taken ? |

|---|---|

| size is greater than 32 bytes | ✅ |

| size is greater than 64 bytes | ✅ |

size is greater than __x86_rep_movsb_threshold | ✅ |

| dest doesn’t start in src | ✅ |

size is greater than __x86_rep_movsb_stop_threshold | ❌ |

LSB(__x86_string_control) == 0 | ❌ |

dest - src is greater than 2^32-64 | ❌ |

✅ = taken ❌ = not taken

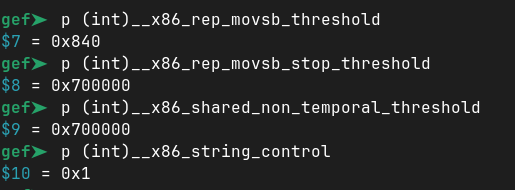

We can check the value of the thresholds and of the “string control” constant.

Value of the comparison constants in gdb

And after aligning rsi and rdi on 64 bytes, we call our beloved rep movsb. 😄

But note that this is very dependent on our memory layout:

- with size >= 0x700000,

memcpywould copy the buffer in blocks of 2^14 bytes using all the ymm registers and prefetching, then terminate to copy by blocks of 128 bytes until the end. - with 32 < size < 64, it would be a simple 64-byte copy using ymm0 and ymm1

- etc…

We can find an explanation for the __x86_rep_movsb_threshold value in this commit message:

In the process of optimizing memcpy for AMD machines, we have found the vector move operations are outperforming enhanced REP MOVSB for data transfers above the L2 cache size on Zen3 architectures. To handle this use case, we are adding an upper bound parameter on enhanced REP MOVSB:’__x86_rep_movsb_stop_threshold'.

As per large-bench results, we are configuring this parameter to the L2 cache size for AMD machines and applicable from Zen3 architecture supporting the ERMS feature. For architectures other than AMD, it is the computed value of non-temporal threshold parameter.

Note that since this commit sysdeps/x86/dl-cacheinfo.h has been changed and __x86_rep_movsb_stop_threshold is equal to __x86_shared_non_temporal_threshold for all x86 architectures nowadays.

You can read all the details of the choices made for the __x86_shared_non_temporal_threshold tunable in sysdeps/x86/dl-cacheinfo.h:

/* The default setting for the non_temporal threshold is [1/8, 1/2] of size

of the chip's cache (depending on `cachesize_non_temporal_divisor` which

is microarch specific. The default is 1/4). For most Intel processors

with an initial release date between 2017 and 2023, a thread's

typical share of the cache is from 18-64MB. Using a reasonable size

fraction of L3 is meant to estimate the point where non-temporal stores

begin out-competing REP MOVSB. As well the point where the fact that

non-temporal stores are forced back to main memory would already occurred

to the majority of the lines in the copy. Note, concerns about the entire

L3 cache being evicted by the copy are mostly alleviated by the fact that

modern HW detects streaming patterns and provides proper LRU hints so that

the maximum thrashing capped at 1/associativity. */

unsigned long int non_temporal_threshold

= shared / cachesize_non_temporal_divisor;

/* If the computed non_temporal_threshold <= 3/4 * per-thread L3, we most

likely have incorrect/incomplete cache info in which case, default to

3/4 * per-thread L3 to avoid regressions. */

unsigned long int non_temporal_threshold_lowbound

= shared_per_thread * 3 / 4;

if (non_temporal_threshold < non_temporal_threshold_lowbound)

non_temporal_threshold = non_temporal_threshold_lowbound;

/* If no ERMS, we use the per-thread L3 chunking. Normal cacheable stores run

a higher risk of actually thrashing the cache as they don't have a HW LRU

hint. As well, their performance in highly parallel situations is

noticeably worse. Zhaoxin processors are an exception, the lowbound is not

suitable for them based on actual test data. */

if (!CPU_FEATURE_USABLE_P (cpu_features, ERMS)

&& cpu_features->basic.kind != arch_kind_zhaoxin)

non_temporal_threshold = non_temporal_threshold_lowbound;

/* SIZE_MAX >> 4 because memmove-vec-unaligned-erms right-shifts the value of

'x86_non_temporal_threshold' by `LOG_4X_MEMCPY_THRESH` (4) and it is best

if that operation cannot overflow. Minimum of 0x4040 (16448) because the

L(large_memset_4x) loops need 64-byte to cache align and enough space for

at least 1 iteration of 4x PAGE_SIZE unrolled loop. Both values are

reflected in the manual. */

unsigned long int maximum_non_temporal_threshold = SIZE_MAX >> 4;

unsigned long int minimum_non_temporal_threshold = 0x4040;

/* If `non_temporal_threshold` less than `minimum_non_temporal_threshold`

it most likely means we failed to detect the cache info. We don't want

to default to `minimum_non_temporal_threshold` as such a small value,

while correct, has bad performance. We default to 64MB as reasonable

default bound. 64MB is likely conservative in that most/all systems would

choose a lower value so it should never forcing non-temporal stores when

they otherwise wouldn't be used. */

if (non_temporal_threshold < minimum_non_temporal_threshold)

non_temporal_threshold = 64 * 1024 * 1024;

else if (non_temporal_threshold > maximum_non_temporal_threshold)

non_temporal_threshold = maximum_non_temporal_threshold;When the block to copy is greater than __x86_shared_non_temporal_threshold, the __memmove_avx_unaligned_erms implementation uses an unrolled loop of AVX2 registers with prefetching and non-temporal stores using the vmovntdq (vex mov non-temporal double quadword).

The “Intel® 64 and IA-32 Architectures Optimization Reference Manual: Volume 1” section 9.6.1 gives hints on when to use non-temporal stores:

Use non-temporal stores in the cases when the data to be stored is:

- Write-once (non-temporal).

- Too large and thus cause cache thrashing.

Non-temporal stores do not invoke a cache line allocation, which means they are not write-allocate. As a result, caches are not polluted and no dirty writeback is generated to compete with useful data bandwidth. Without using non-temporal stores, bus bandwidth will suffer when caches start to be thrashed because of dirty writebacks.

The memcpy function of the glibc is a piece of code tailored to be the most efficient and adaptable to every hardware and memory layout possible.

Benchmark 2: memset

As you can see with godbolt, gcc will inline a call to memset using the rep stosq instruction under certain circumstances.

This is already a good hint about the efficiency of this instruction.

#include <string.h>

#define BUF_LEN (1 << 13)

char a[BUF_LEN];

int value;

int main(void) {

memset(a, value, BUF_LEN);

return 0;

}gcc -O1 -g -o memset memset.c; int main(void) {

; memset(a, value, BUF_LEN);

401106: mov edi,0x404060

40110b: movzx eax,BYTE PTR [rip+0x2f2e] # 404040 <value>

401112: movabs rdx,0x101010101010101

40111c: imul rax,rdx

401120: mov ecx,0x400

401125: rep stos QWORD PTR es:[rdi],rax

; return 0;

; }

401128: mov eax,0x0

40112d: retTo broadcast the first byte of value in all bytes of the rax register, the compiler puts 0x1010101010101010 in rdx and multiplies rax and rdx.

I wrote a macro to reproduce this:

; void *memset_movb(rdi: void s[.n], rsi: int c, rdx: size_t n);

memset_movb:

test rdx, rdx ; if(rdx == 0)

jz .exit ; goto .exit

xor ecx, ecx ; rcx = 0

.loop:

mov [rdi + rcx], sil ; s[rcx] = sil

add rcx, 1 ; rcx++

sub rdx, 1 ; rdx--

jnz .loop ; if(rdx != 0) goto .loop

.exit:

mov rax, rdi ; return s

ret

%macro setup_memset_rax 0

mov rcx, rdx ; rcx = n

movzx eax, sil ; Mask the first byte of esi

mov rdx, 0x0101010101010101 ; Load the multiplication mask

mul rdx ; Replicate al in all rax register (rdx is erased)

mov rdx, rcx ; rdx = n

%endmacro

; Macro used to set less than a quadword

; The macro take two parameters:

; - A register containing the byte count to copy

; - A register containing the current 8-byte aligned offset

%macro memset_epilog_qword 2

cmp %1, 4

jb %%word

mov [rdi + %2], eax

add %2, 4

sub %1, 4

%%word:

cmp %1, 2

jb %%byte

mov [rdi + %2], ax

add %2, 2

sub %1, 2

%%byte:

test %1, %1

jz %%exit

mov [rdi + %2], al

%%exit:

%endmacro

; void *memset_stosq(rdi: void s[.n], rsi: int c, rdx: size_t n);

memset_stosq:

setup_memset_rax

mov rsi, rdi ; rsi = s

cmp rdx, 8 ; if(n < 8)

jb .end ; goto .end

mov rcx, rdi ; rcx = s

add rdx, rdi ; rdx = dst + n

stosq ; *(rdi++) = rax

and rdi, -8 ; rdi = align(dst + 8, 8)

sub rdx, rdi ; rdx = dst + n - align(dst + 8, 8)

mov rcx, rdx ; rcx = dst + n - align(dst + 8, 8)

and rdx, (8 - 1) ; rdx = (dst + n - align(dst + 8, 8)) % 8

shr rcx, 3 ; rcx = (dst + n - align(dst + 8, 8)) / 8

rep stosq ; for(; rcx != 0; rcx -= 1)

; *(rdi++) = rax

.end:

xor ecx, ecx

memset_epilog_qword rdx, rcx

.exit:

mov rax, rsi ; Return s

ret

; void *memset_movq(rdi: void s[.n], rsi: int c, rdx: size_t n);

memset_movq:

cmp rdx, 8

jb .end

setup_memset_rax

xor ecx, ecx

.loop:

mov [rdi + rcx], rax

add rcx, 8

sub rdx, 8

cmp rdx, 8

jae .loop

.end:

memset_epilog_qword rdx, rcx

mov rax, rdi

ret

; Macro used to set less than a 16 bytes

; The macro take two parameters:

; - A register containing the byte count to copy

; - A register containing the current 16-byte aligned offset

%macro memset_epilog_avx 2

vmovq rax, xmm0

cmp %1, 8

jb %%dword

mov [rdi + %2], rax

add %2, 8

sub %1, 8

%%dword:

memset_epilog_qword %1, %2

%endmacro

; void *memset_avx(rdi: void s[.n], rsi: int c, rdx: size_t n);

memset_avx:

movzx esi, sil ; Copy sil in eax with zero-extend

vmovd xmm0, esi ; Copy the low dword (eax) into xmm0

vpxor xmm1, xmm1 ; Load a shuffle mask filled with zero indexes

vpshufb xmm0, xmm0, xmm1 ; Broadcast the first byte of xmm0 to all xmm0 bytes

cmp rdx, 16

jb .end

vmovdqu [rdi], xmm0

lea rcx, [rdi + 15] ; rcx = src + 15

and rcx, -16 ; rcx = align((src + 15), 16)

sub rcx, rdi ; rcx = align((src + 15), 16) - src

sub rdx, rcx ; rdx = src + n - align((src + 15), 16)

cmp rdx, 16

jb .end

.loop:

vmovdqa [rdi + rcx], xmm0 ; Copy the 16 bytes of xmm0 to s

add rcx, 16 ; rcx += 16

sub rdx, 16 ; rdx -= 16

cmp rdx, 16 ; if(rdx >= 16)

jae .loop ; goto .loop

.end:

memset_epilog_avx rdx, rcx

.exit:

mov rax, rdi ; rax = s

ret

; Macro used to set less than a 32 bytes

; The macro take two parameters:

; - A register containing the byte count to copy

; - A register containing the current 32-byte aligned offset

%macro memset_epilog_avx2 2

cmp %1, 16

jb %%qword

vmovdqu [rdi + %2], xmm0

add %2, 16

sub %1, 16

%%qword:

memset_epilog_avx %1, %2

%endmacro

; void *memset_avx2(rdi: void s[.n], rsi: int c, rdx: size_t n);

memset_avx2:

xor ecx, ecx

movzx esi, sil ; Copy sil in eax with zero-extend

vmovd xmm1, esi ; Copy the low dword (eax) into xmm0

vpbroadcastb ymm0, xmm1 ; Broadcast the first byte of xmm1 to all ymm0

cmp rdx, 32

jb .end

vmovdqu [rdi], ymm0

lea rcx, [rdi + 31] ; rcx = src + 31

and rcx, -32 ; rcx = align((src + 31), 32)

sub rcx, rdi ; rcx = align((src + 31), 32) - src

sub rdx, rcx ; rdx = src + n - align((src + 31), 32)

cmp rdx, 32 ; if(rdx < 32)

jb .end ; goto .end

.loop:

vmovdqa [rdi + rcx], ymm0 ; Copy the 32 bytes of ymm0 to s

add rcx, 32 ; rcx += 32

sub rdx, 32 ; rdx -= 32

cmp rdx, 32 ; if(rdx >= 32)

jae .loop ; goto .loop

.end:

memset_epilog_avx2 rdx, rcx

mov rax, rdi ; rax = s

vzeroupper

retOnce again, I wrote 6 different versions of the memset function: an unoptimized for loop, the glibc memset function, rep stosb, rep stosq, and the AVX and AVX2 extensions.

void *__attribute__((optimize("O1"))) memset_dummy(void *dst, unsigned int c,

unsigned long n) {

void *const ret = dst;

for (int i = 0; i < n; i++)

*((char *)dst++) = (char)c;

return ret;

}section .text

; void *memset_stosb(rdi: void s[.n], rsi: int c, rdx: size_t n);

memset_stosb:

mov rcx, rdx ; rcx = n

movzx eax, sil ; eax = c

mov rsi, rdi ; rdx = s

rep stosb ; for(; rcx != 0; rcx--)

; *(rdi++) = al

mov rax, rsi ; return s

ret; void *memset_stosb_std(rdi: void s[.n], rsi: int c, rdx: size_t n);

memset_stosb_std:

std ; Set direction flag

movzx eax, sil ; eax = c

mov rsi, rdi ; rsi = s

mov rcx, rdx ; rcx = n

sub rdx, 1 ; rdx = n - 1

add rdi, rdx ; rdi = s + (n - 1)

rep stosb ; for(; rcx != 0; rcx--)

; *(rdi--) = al

mov rax, rsi ; return s

cld ;

ret; void *memset_movb(rdi: void s[.n], rsi: int c, rdx: size_t n);

memset_movb:

test rdx, rdx ; if(rdx == 0)

jz .exit ; goto .exit

xor ecx, ecx ; rcx = 0

.loop:

mov [rdi + rcx], sil ; s[rcx] = sil

add rcx, 1 ; rcx++

sub rdx, 1 ; rdx--

jnz .loop ; if(rdx != 0) goto .loop

.exit:

mov rax, rdi ; return s

ret; Macro used to set less than a quadword

; The macro take two parameters:

; - A register containing the byte count to copy

; - A register containing the current 8-byte aligned offset

%macro memset_epilog_qword 2

cmp %1, 4

jb %%word

mov [rdi + %2], eax

add %2, 4

sub %1, 4

%%word:

cmp %1, 2

jb %%byte

mov [rdi + %2], ax

add %2, 2

sub %1, 2

%%byte:

test %1, %1

jz %%exit

mov [rdi + %2], al

%%exit:

%endmacro

; void *memset_stosq(rdi: void s[.n], rsi: int c, rdx: size_t n);

memset_stosq:

setup_memset_rax

mov rsi, rdi ; rsi = s

cmp rdx, 8 ; if(n < 8)

jb .end ; goto .end

mov rcx, rdi ; rcx = s

add rdx, rdi ; rdx = dst + n

stosq ; *(rdi++) = rax

and rdi, -8 ; rdi = align(dst + 8, 8)